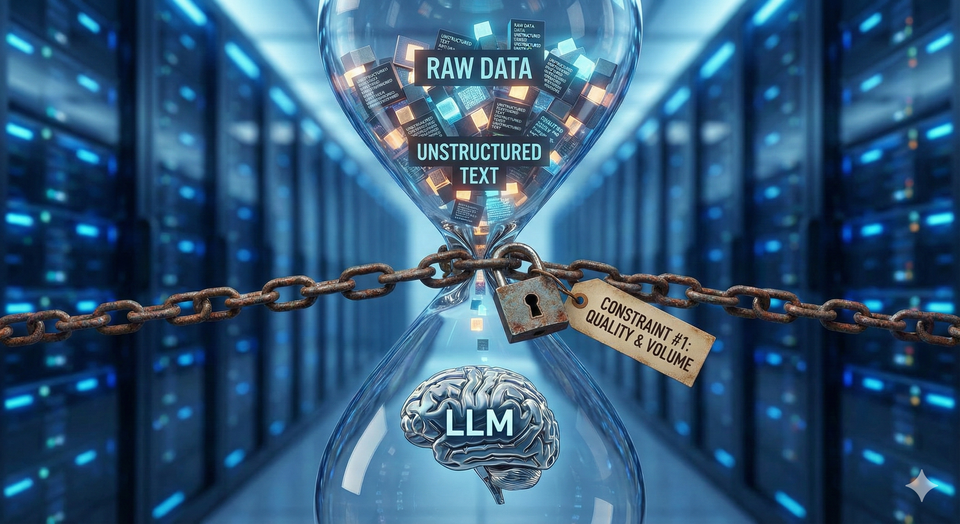

Data: the first constraint

Do you know why there are so many new models for English, Spanish, and Mandarin?

It's the data.

By now, the training recipes, model architectures, and techniques that work are open source and well understood. We just need to feed in the data.

So when my team and I started building the Telugu LLM, we thought: great, all we need to do is feed it data. Let's find Telugu data online, train on it, and we're done.

Imagine our shock when we found out how little Telugu data there was online.

Telugu is one of the world's most significant and fastest-growing languages, holding a prestigious status as a "Classical Language" of India.

Global & National Standing (2025)

- Global Rank: Approximately the 14th to 18th most spoken native language in the world.

- India Rank: The 4th most spoken language in India, following Hindi, Bengali, and Marathi.

- Total Speakers: Estimated at roughly 96 million (with nearly 82–83 million native speakers and ~13 million second-language speakers).

We have a rich culture, a big film industry, and a long history of great poets and written works. So we assumed we would find all the data we wanted online and would just need to train on it.

But in the world of AI and Big Data, Telugu is considered a low-resource language despite its massive population. While Spanish and French have trillions of tokens available, Telugu’s digital footprint is significantly smaller, though growing rapidly.

As of late 2025, here is the breakdown of Telugu's presence in major online text datasets:

1. AI Training Datasets (Tokens)

In the context of Large Language Models (LLMs), "tokens" represent the chunks of text used for training.

- The Gap: Global models like Llama 3 were trained on over 15 trillion tokens, but Telugu makes up less than 0.02% of the standard web-crawl data (Common Crawl).

- High-Quality Corpus: Projects like IndicCorp v2 (by AI4Bharat) have curated roughly 20 billion tokens across 22 Indian languages. For Telugu specifically, the high-quality, cleaned text available for researchers is estimated at around 1 to 2 billion tokens.
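To put the 0.02% figure from the first bullet in perspective, here is a back-of-envelope calculation. It assumes, purely for illustration, that Telugu's share of a 15-trillion-token training mix matches the under-0.02% share quoted for Common Crawl. Real training mixes are filtered and reweighted, so treat the result as a rough upper bound under that assumption, not a measured figure.

```python
# Back-of-envelope: what does a 0.02% share of a 15-trillion-token
# training mix amount to?
# Assumption (illustrative only): Telugu's Common Crawl share carries
# over unchanged to the full training mix.

TOTAL_TOKENS = 15 * 10**12    # Llama 3 scale: ~15 trillion tokens
TELUGU_SHARE_PERCENT = 0.02   # "less than 0.02%", used as an upper bound


def tokens_at_share(total_tokens: int, share_percent: float) -> float:
    """Return how many tokens a given percentage of the total represents."""
    return total_tokens * share_percent / 100


upper_bound = tokens_at_share(TOTAL_TOKENS, TELUGU_SHARE_PERCENT)
print(f"At most ~{upper_bound / 1e9:.1f} billion Telugu tokens")
```

Under that assumption, Telugu would contribute at most about 3 billion tokens, roughly one token in every 5,000.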

There is practically no data for training an LLM.

Biswajit and the team scoured the internet for open-source data we could use for training. We finally ended up with this list:

| Dataset | Words (Million) | Gemma Tokens (Million) | Source |
|---|---|---|---|
| newsarticles-navatelangana-swecha | 89 | 85 | Viswam |
| newsarticles-prajasakti-swecha | 63 | 60 | Viswam |
| tewiki-latest-pages-articles | 183 | 233 | Viswam |
| sangraha-te | 5,340 | 5,409 | sangraha |
| fineweb-2-te | 2,178 | 2,136 | fineweb-2 |
| HPLT2.0_cleaned-te | 2,372 | 2,450 | HPLT2.0_cleaned |
| book_data_hf | 41 | 57 | Viswam |
| CulturaX-te | 1,895 | 1,906 | CulturaX |
| Total | ~12,161 | ~12,336 | All |
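As a quick sanity check, the totals row can be recomputed from the per-dataset figures, along with the ratio of Gemma tokens to words for each source. The numbers are copied straight from the table; the tokens-per-word ratio is simply derived arithmetic, not something measured separately.

```python
# Recompute the totals row of the dataset table and the Gemma
# tokens-per-word ratio for each source.
# Figures are copied from the table (in millions).

DATASETS = {
    # name: (words_millions, gemma_tokens_millions)
    "newsarticles-navatelangana-swecha": (89, 85),
    "newsarticles-prajasakti-swecha": (63, 60),
    "tewiki-latest-pages-articles": (183, 233),
    "sangraha-te": (5_340, 5_409),
    "fineweb-2-te": (2_178, 2_136),
    "HPLT2.0_cleaned-te": (2_372, 2_450),
    "book_data_hf": (41, 57),
    "CulturaX-te": (1_895, 1_906),
}

total_words = sum(words for words, _ in DATASETS.values())
total_tokens = sum(tokens for _, tokens in DATASETS.values())

# Per-dataset ratio of Gemma tokens to words
for name, (words, tokens) in DATASETS.items():
    print(f"{name:36s} {tokens / words:.2f} tokens/word")

print(f"Total: {total_words:,}M words, {total_tokens:,}M Gemma tokens")
print(f"Overall: {total_tokens / total_words:.2f} tokens/word")
```

The sums match the ~12,161M words and ~12,336M tokens in the table, and the overall ratio comes out at roughly 1.01 Gemma tokens per Telugu word. For scale, ~12.3 billion tokens is about 0.08% of a 15-trillion-token training run.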

Note: For multilingual datasets, only the Telugu portion was extracted and used, since we are building a Telugu-only model.
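One simple way to pull Telugu text out of a mixed-language corpus is a script-based filter. The sketch below is a hypothetical heuristic, not necessarily how our pipeline did it (many multilingual corpora already ship per-language splits): it keeps a document if at least a chosen fraction of its letters fall in the Telugu Unicode block, U+0C00 to U+0C7F. The names `telugu_ratio`, `filter_telugu`, and the 0.8 threshold are illustrative assumptions.

```python
# Hypothetical script-based filter for extracting Telugu documents
# from a multilingual corpus. A document counts as Telugu if at least
# MIN_TELUGU_RATIO of its letters are in the Telugu Unicode block.

from typing import Iterable, Iterator

TELUGU_START, TELUGU_END = 0x0C00, 0x0C7F
MIN_TELUGU_RATIO = 0.8  # hypothetical threshold; tune on real data


def is_telugu(ch: str) -> bool:
    """True if the character lies in the Telugu Unicode block."""
    return TELUGU_START <= ord(ch) <= TELUGU_END


def telugu_ratio(text: str) -> float:
    """Fraction of letter-like characters that are Telugu."""
    # Telugu vowel signs are combining marks, which str.isalpha() does
    # not count as letters, so include any Telugu-block character too.
    relevant = [ch for ch in text if ch.isalpha() or is_telugu(ch)]
    if not relevant:
        return 0.0
    return sum(is_telugu(ch) for ch in relevant) / len(relevant)


def filter_telugu(docs: Iterable[str], min_ratio: float = MIN_TELUGU_RATIO) -> Iterator[str]:
    """Yield only the documents that are predominantly Telugu."""
    for doc in docs:
        if telugu_ratio(doc) >= min_ratio:
            yield doc


docs = ["తెలుగు భాష", "Hello world", "Telugu తెలుగు"]
print(list(filter_telugu(docs)))
```

A script filter like this cannot tell Telugu apart from other text written in Telugu script, and mixed Telugu-English documents fall below the threshold, so a real pipeline would likely pair it with a proper language-identification model.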

That's it. That's all the Telugu tokens we could find on the open web. If anyone knows of other sources, please comment or reach out so we can add them to this list.